A student from Loughborough University has designed an autonomous way-finding device for people with vision disabilities who are unable to home a service animal.

By recreating the role of a guide dog and programming quick, safe routes to destinations using real-time data, the invention could be the future for people with vision disabilities.

Anthony Camu, a final-year Industrial Design and Technology student, wanted to design a product that replicates a guide dog’s functions for visually impaired people who cannot keep one themselves.

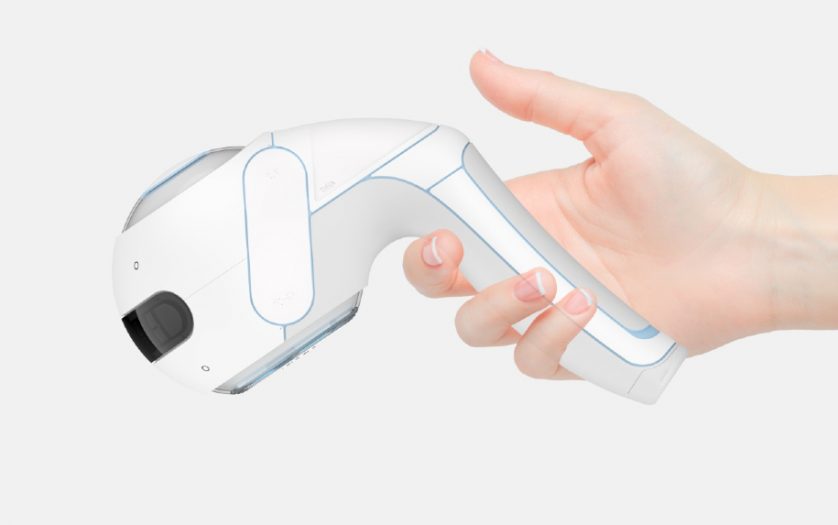

Inspired by virtual reality gaming consoles, he has conceptualised and started to prototype ‘Theia’, a portable and concealable handheld device that guides users through outdoor environments and large indoor spaces with very little user input.

In essence, it’s a handheld robotic guide dog – minus the waggy tail.

Inspired by autonomous vehicles, Theia aims to translate that sense of effortless driving into a system of effortless walking, helping users make complex manoeuvres without needing to see or think.

Anthony has successfully created prototypes that feature control moment gyroscope (CMG) technology.

The prototypes were used to experiment with using momentum to manipulate the movement of the user’s hand.

Although the project is in its infancy and has issues such as excessive vibration and breaking motors, the potential is there.

Anthony is hoping to build on his design and produce more prototypes by working with design engineers and programmers – perhaps even founding a start-up company and launching a crowdfunding campaign.