Most people recognize the white cane as a simple but indispensable tool that helps people with vision disabilities navigate the world.

Tech Xplore reports that a team of Stanford University researchers has developed a low-cost robotic cane that steers people with vision disabilities safely and efficiently through their surroundings.

Drawing on tools from autonomous vehicles, the research team built the augmented cane to help users detect, identify, and maneuver around obstacles, and to follow routes both indoors and outdoors.

The augmented cane is not the first smart cane, but existing sensor canes can be cumbersome and costly: they can weigh almost 50 pounds and cost approximately $6,000. Their capabilities are also limited, since they sense only objects directly in front of the user. The augmented cane, by contrast, carries advanced sensors, weighs just 3 pounds, can be built at home from off-the-shelf parts, runs free, open-source software, and costs $400.

The researchers hope their cane will be an inexpensive, practical device for the more than 250 million people with vision disabilities worldwide.

“We wanted something more user-friendly than just a white cane with sensors,” said Patrick Slade, a graduate research assistant in the Stanford Intelligent Systems Laboratory and first author of a paper published in the journal Science Robotics describing the augmented cane.

“Something that can not only tell you there’s an object in your way, but tell you what that object is and then help you navigate around it.” The paper includes a downloadable parts list and do-it-yourself instructions for soldering the device together at home.

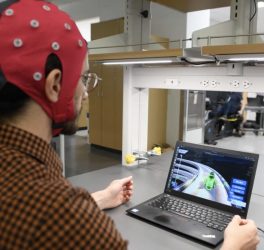

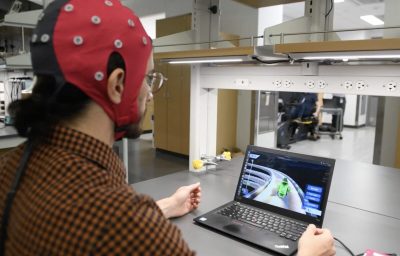

The augmented cane is equipped with a LIDAR sensor. LIDAR is the laser-based technology used in some self-driving cars and aircraft to measure the distance to nearby obstacles. The cane also carries GPS, accelerometers, magnetometers, and gyroscopes, like those in a smartphone, which track the user’s position, speed, direction, and other variables. The cane makes decisions using AI-based wayfinding and robotics algorithms such as simultaneous localization and mapping (SLAM) and visual servoing, which steers the user toward an object identified in an image.
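To make “visual servoing” a little more concrete, here is a minimal Python sketch of one common form of it: a proportional controller that turns the horizontal pixel position of a detected object into a steering command. This is not the Stanford team’s code. The ideal pinhole-camera model, the function names `bearing_to_target` and `servo_steering`, the gain of 0.8, and the 30-degree steering limit are all illustrative assumptions.

```python
import math


def bearing_to_target(u_px: float, image_width_px: int, hfov_rad: float) -> float:
    """Horizontal angle (radians) from the camera's optical axis to pixel column u_px.

    Assumes an ideal pinhole camera whose principal point is at the image center.
    Positive values mean the target is to the right of center.
    """
    cx = image_width_px / 2.0                 # principal point (assumed image center)
    focal_px = cx / math.tan(hfov_rad / 2.0)  # focal length expressed in pixels
    return math.atan((u_px - cx) / focal_px)


def servo_steering(u_px: float, image_width_px: int, hfov_rad: float,
                   gain: float = 0.8,
                   max_cmd_rad: float = math.radians(30)) -> float:
    """Proportional visual-servoing law (hypothetical gain and limit).

    The steering command is proportional to the bearing error between the
    camera axis and the target, clipped to +/- max_cmd_rad.
    """
    error = bearing_to_target(u_px, image_width_px, hfov_rad)
    cmd = gain * error
    return max(-max_cmd_rad, min(max_cmd_rad, cmd))


# Target detected at column 480 of a 640-px-wide image with a 60-degree field of view.
print(servo_steering(480, 640, math.radians(60)))
```

In that example the target sits about 16 degrees right of center, so the sketch returns a command of roughly 0.22 rad (about 13 degrees) to the right. A proportional law is the simplest choice: the farther the object drifts from the center of the image, the harder it steers, and the command fades to zero once the object is straight ahead.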

“Our lab is based out of the Department of Aeronautics and Astronautics, and it has been thrilling to take some of the concepts we have been exploring and apply them to assist people with blindness,” said Mykel Kochenderfer, an associate professor of aeronautics and astronautics, an expert in aircraft collision-avoidance systems, and the study’s senior author.

Mounted at the tip of the cane is the centerpiece of the whole device: a motorized, omnidirectional wheel that stays in contact with the ground. The wheel leads the user around obstacles by gently tugging and nudging to the left or right. With built-in GPS and mapping capabilities, the augmented cane can even guide its user to specific destinations, such as a favorite store in the mall or a local coffee shop.
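The article does not say how the cane decides which way to nudge. As a purely hypothetical illustration, the Python sketch below checks a set of candidate headings against a planar LIDAR sweep and picks the clear one closest to the direction the user wants to go. The function names, the 1.5 m safety distance, the 10-degree corridor half-width, and the 5-degree candidate spacing are assumptions, not details of the augmented cane.

```python
import math


def clearance(scan, heading, half_width):
    """Smallest LIDAR range within +/- half_width of heading (inf if no readings)."""
    ranges = [r for angle, r in scan if abs(angle - heading) <= half_width]
    return min(ranges, default=math.inf)


def choose_heading(scan, goal_heading=0.0, safe_dist_m=1.5,
                   half_width=math.radians(10), step=math.radians(5),
                   max_turn=math.radians(90)):
    """Return the clear candidate heading closest to goal_heading, or None.

    scan: list of (angle_rad, range_m) pairs from a planar LIDAR, with angles
    measured from the walking direction (0 = straight ahead, positive = left)
    and kept within (-pi, pi]. All thresholds here are hypothetical.
    """
    n = int(round(max_turn / step))
    candidates = [i * step for i in range(-n, n + 1)]  # ordered right to left
    clear = [h for h in candidates if clearance(scan, h, half_width) >= safe_dist_m]
    if not clear:
        return None  # nothing clear: a real device might stop and alert the user
    # Ties (equally good left/right options) resolve to the first one found,
    # i.e. the rightmost of the tied headings.
    return min(clear, key=lambda h: abs(h - goal_heading))


# Example: an obstacle 0.8 m ahead spanning about +/-17 degrees, open space elsewhere.
scan = [(math.radians(d), 0.8 if abs(d) <= 17 else 5.0) for d in range(-90, 91)]
print(math.degrees(choose_heading(scan, goal_heading=math.radians(20))))
```

With the goal slightly to the left, the sketch picks a heading of about 30 degrees left, the nearest direction whose corridor clears the obstacle. On a cane like the one described, a heading of this kind would become the sideways tug of the wheel, with the user free to resist it.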

In real-world tests with users who volunteered through the Palo Alto Vista Center for the Blind and Visually Impaired, people with vision disabilities, as well as blindfolded sighted people, were asked to navigate hallways, avoid obstacles, and travel to outdoor locations.

“We want the humans to be in control but provide them with the right level of gentle guidance to get them where they want to go as safely and efficiently as possible,” Kochenderfer said.

The augmented cane performed well in these tests. It increased walking speed for participants with vision disabilities by about 20 percent compared with the white cane alone. For blindfolded sighted participants, the gain was even larger: more than a third. Faster walking speed is associated with a better quality of life, Slade noted, so the team hopes the device could improve its users’ quality of life.

The team is open-sourcing every aspect of the project. “We wanted to optimize this project for ease of replication and cost. Anyone can go and download all the code, bill of materials, and electronic schematics, all for free,” Kochenderfer said.

“Solder it up at home. Run our code. It’s pretty cool,” Slade added.

But Kochenderfer emphasized that the cane is still a prototype. “A lot of significant engineering and experiments are necessary before it is ready for everyday use,” he said, adding that the team would love to partner with companies that could streamline the design and scale up production to make the augmented cane even more affordable.

The team’s next steps include refining the prototype and developing a model that uses a smartphone as its processor, an advance that could enhance functionality, expand access to the technology, and further reduce costs.