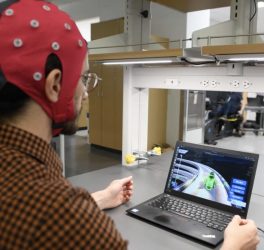

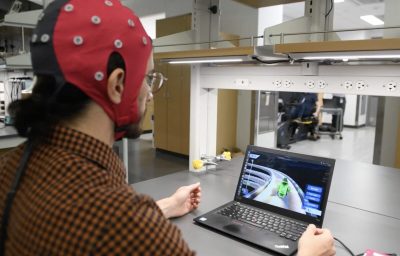

Harvard University students have developed a wearable robotic device that helps people who are blind or have low vision navigate more easily.

The students have launched a startup, Foresight, built around a wearable navigation aid for people who are blind that uses soft robotics and computer vision technology. Foresight connects to the camera of a user’s smartphone, which is worn around the neck. The system detects nearby objects, and this information triggers soft textile units on the body that inflate to provide haptic feedback as objects approach and pass.

“Being diagnosed with a permanent eyesight problem means getting used to a whole new way of navigating the world,” said Ed Bayes, a student in Harvard’s MDE program.

“We spoke to people living with visual impairment to understand their needs and built around that.”

The startup was born out of the joint SEAS/GSD course Nano Micro Macro, in which teams of students are challenged to apply emerging technology from Harvard labs. Foresight was inspired by the students’ experiences with blind family members, who spoke of the stigma surrounding the use of assistive devices.

“Importantly, Foresight is discreet, affordable, and intuitive. It provides an extra layer of comfort to help people move around more confidently,” Bayes added.

“Most wearable navigation aids rely on vibrating motors, which can be uncomfortable and bothersome to users,” said Anirban Ghosh, M.D.E. ’21. “Soft actuators are more comfortable and can provide the same tactile information.”

With Foresight, the distance between an object and the user correlates with the amount of pressure they feel on their body from the actuators.

“The varied inflation of multiple actuators represents the angular differences of where those objects are in space,” said Nick Collins.
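The mapping described here, from an object's distance to actuator pressure and from its angle to a particular actuator, can be illustrated with a minimal Python sketch. This is not the Foresight team's implementation: the number of actuators, the sensing range, the field of view, the linear pressure curve, and the assumption that closer objects produce more pressure are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

# All constants are illustrative assumptions, not values from the Foresight team.
MAX_RANGE_M = 4.0          # objects farther than this produce no pressure
FIELD_OF_VIEW_DEG = 90.0   # assumed horizontal field of view of the camera
NUM_ACTUATORS = 4          # assumed number of textile units, ordered left to right


@dataclass
class Detection:
    distance_m: float  # distance from the user to the object
    angle_deg: float   # bearing; 0 is straight ahead, negative is to the left


def pressure_for_distance(distance_m: float) -> float:
    """Map distance to a normalized pressure in [0, 1]; closer means more pressure."""
    clamped = min(max(distance_m, 0.0), MAX_RANGE_M)
    return 1.0 - clamped / MAX_RANGE_M


def actuator_for_angle(angle_deg: float) -> int:
    """Pick the actuator whose angular sector contains the object's bearing."""
    half = FIELD_OF_VIEW_DEG / 2.0
    clamped = min(max(angle_deg, -half), half)
    sector = FIELD_OF_VIEW_DEG / NUM_ACTUATORS
    return min(int((clamped + half) / sector), NUM_ACTUATORS - 1)


def actuator_pressures(detections: list[Detection]) -> list[float]:
    """Return one pressure per actuator, keeping the strongest signal per sector."""
    pressures = [0.0] * NUM_ACTUATORS
    for d in detections:
        i = actuator_for_angle(d.angle_deg)
        pressures[i] = max(pressures[i], pressure_for_distance(d.distance_m))
    return pressures
```

With these assumed settings, an object 1 m away on the far left and another 3 m away slightly to the right, `actuator_pressures([Detection(1.0, -40.0), Detection(3.0, 10.0)])`, yields `[0.75, 0.0, 0.25, 0.0]`: the nearer object inflates the leftmost unit more strongly than the farther object inflates the third.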

“We want to know if the information we are giving them actually translates into a user-friendly interpretation of objects in the space around them,” Collins said. “This is another tool in their arsenal. Our desire isn’t necessarily to get rid of any current tools, but to provide another, more robust sensory experience.”

The team is inspired by the opportunity to use emerging technology to provide an implementable solution that could help many people.