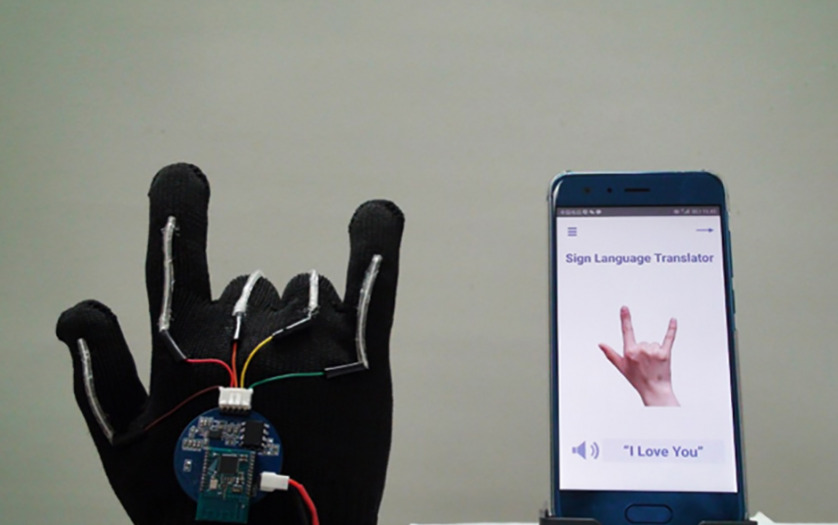

UCLA scientists have created a glove that translates American Sign Language into speech in real time through a smartphone app.

“Our hope is that this opens up an easy way for people who use sign language to communicate directly with non-signers without needing someone else to translate for them,” said Jun Chen, an assistant professor of bioengineering at the UCLA Samueli School of Engineering and the principal investigator on the research. “In addition, we hope it can help more people learn sign language themselves.”

The system includes a pair of gloves with thin, stretchable sensors that run the length of each of the five fingers. These sensors, made from electrically conducting yarns, pick up hand motions and finger placements that stand for individual letters, numbers, words and phrases.

The device then turns the finger movements into electrical signals, which are sent to a dollar-coin–sized circuit board worn on the wrist. The board transmits those signals wirelessly to a smartphone that translates them into spoken words at a rate of about one word per second.

The researchers also added adhesive sensors to testers’ faces, between their eyebrows and on one side of their mouths, to capture facial expressions that are part of American Sign Language.

Previous wearable systems that offered translation from American Sign Language were limited by bulky and heavy device designs or were uncomfortable to wear, Chen said.

The device developed by the UCLA team is made from lightweight and inexpensive but long-lasting, stretchable polymers. The electronic sensors are also very flexible and inexpensive.