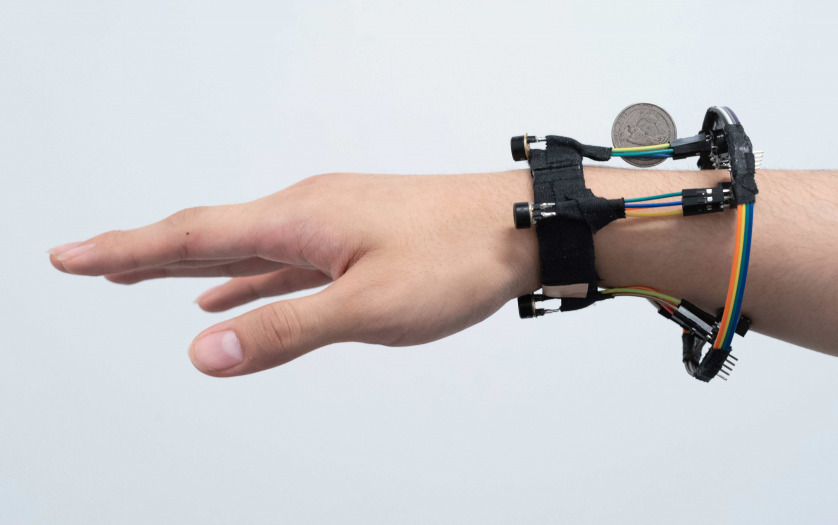

Researchers at the University of Wisconsin–Madison and Cornell University have developed a wrist-mounted device that accurately tracks finger and hand movements using four tiny cameras.

The bracelet helps solve a difficult technological problem, tracking the human hand, and has potential applications in sign language translation, virtual reality, mobile health and human-robot interaction. The researchers dubbed their device FingerTrak. It can sense the many positions of the human hand, including 20 finger joint positions, and reconstruct them in 3D.

Cheng Zhang, a professor of information sciences at Cornell University, led the work in collaboration with Yin Li, a professor of biostatistics and medical informatics at the UW School of Medicine and Public Health. Li contributed to the software underlying FingerTrak.

The researchers published their work in June in the Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. It will also be presented at the 2020 Association for Computing Machinery International Joint Conference on Pervasive and Ubiquitous Computing, taking place virtually Sept. 12-16.

Each of the bracelet’s four small cameras, about the size of a pea, snaps multiple silhouette images that together form an outline of the hand. A deep neural network then stitches these silhouette images together and reconstructs the virtual hand in three dimensions. Using this method, the researchers were able to capture the entire hand pose, even when the hand is holding an object.

Animation courtesy of Cornell University.

In addition to potential applications in sign language or virtual reality, Li says accurate measurements of hand motions could improve disease diagnosis.

“How we move our hands and fingers often tells about our health condition,” Li says in a Cornell news release. “A device like this might be used to better understand how the elderly use their hands in daily life, helping to detect early signs of diseases like Parkinson’s and Alzheimer’s.”